Craft CMS inside of Laravel Homestead on Windows 10 using WSL

I’m jotting this down for future Micah because a few things in this process weren’t obvious to me, even though at least a couple of tutorials cover installing Craft CMS from scratch with Laravel Homestead on Windows 10. I also wanted to include Windows Subsystem for Linux (WSL), so this goes a little further.

I’ll mirror a similar tutorial that covers many of the same steps, but I’ll highlight the pain points I hit from using Bash on WSL.

Set Windows up

Let’s get Windows set up first. Install and configure the following.

Use PowerShell, run as Administrator, for these commands.

Install Chocolatey

Set-ExecutionPolicy Bypass -Scope Process -Force; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

Install VirtualBox and Vagrant

cinst virtualbox vagrant

Install the Homestead Vagrant box

vagrant box add laravel/homestead

This will take a while, possibly more than an hour on a slow internet connection. I’d suggest keeping it running in the background in another terminal window while you take care of the rest of this. If you haven’t installed WSL yet (the next step), I’d wait to run this command until after you’ve enabled WSL and rebooted Windows.

Install WSL

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux

WSL made this process a little more challenging because Bash inside of Windows is not yet a first-class citizen when it comes to traversing the operating system. Within the Ubuntu distro, a few packages need to be added.

Use Bash on WSL for these commands.

Check what’s already installed in WSL

apt list --installed

Then install whatever you don’t already have from the steps below.
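Rather than reading the whole list by eye, you can filter it for just the packages this guide installs. The sketch below uses a hypothetical helper, `missing_packages`, that is not part of apt. It reads `apt list --installed` output on standard input and prints any named package that isn't in it. It assumes apt's usual `name/suite,now version arch [installed]` line format.

```bash
# missing_packages: hypothetical helper, not part of apt.
# Reads `apt list --installed` output on stdin and prints each package
# named in the arguments that does not appear in that list.
missing_packages() {
  local installed pkg
  # Keep only the package name (the text before the first "/").
  installed="$(cut -d/ -f1)"
  for pkg in "$@"; do
    if ! printf '%s\n' "$installed" | grep -qxF -e "$pkg"; then
      printf '%s\n' "$pkg"
    fi
  done
}

# Example:
#   apt list --installed 2>/dev/null | missing_packages php php-curl
```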

Update the package list

sudo apt-get update

Install the latest PHP version and verify that it installed

sudo apt-get install php

php -v

Install PHP cURL

sudo apt-get install php-curl

This fixed an error I kept getting:

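craftcms/cms [version number] requires ext-curl * -> the requested PHP extension curl is missing from your system.

Install Composer and move it into the global path

The SHA-384 hash in the second command changes with every release of the Composer installer, so copy the current one from getcomposer.org/download before running it.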

php -r "copy('https://getcomposer.org/installer', 'composer-setup.php');"

php -r "if (hash_file('sha384', 'composer-setup.php') === 'a5c698ffe4b8e849a443b120cd5ba38043260d5c4023dbf93e1558871f1f07f58274fc6f4c93bcfd858c6bd0775cd8d1') { echo 'Installer verified'; } else { echo 'Installer corrupt'; unlink('composer-setup.php'); } echo PHP_EOL;"

php composer-setup.php

php -r "unlink('composer-setup.php');"

sudo mv composer.phar /usr/local/bin/composer

Verify that everything so far is installed. Then it’s time to get through the rest of the installation process.
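To confirm what's installed so far, here is a sketch with two hypothetical helpers. `require_commands` reports any named command that isn't on your PATH. `has_php_extension` checks `php -m` output for an extension such as curl, the one behind the ext-curl error above. Both names are illustrative and not part of PHP or Composer.

```bash
# require_commands: hypothetical helper. Prints each missing command and
# returns non-zero if any of the named commands is not on PATH.
require_commands() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf 'missing: %s\n' "$cmd"
      status=1
    fi
  done
  return "$status"
}

# has_php_extension: hypothetical helper. Reads `php -m` output on stdin
# (one module name per line) and succeeds if the named extension is listed.
has_php_extension() {
  grep -qixF -e "$1"
}

# Example:
#   require_commands php composer && php -m | has_php_extension curl && echo "ready"
```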

Use Bash on WSL for the rest of this.

Homestead configuration

Note: I’m approaching this as a global installation because it keeps Craft encapsulated from the web server that Homestead creates. It also lets you use the same Homestead environment for multiple projects.

Create a projects directory, or use an existing one. This is where your web projects live.

C:\Users\[username]\Sites\

Above is an example of where my web projects live. Now let’s go in there and create the Homestead container directory for your Craft projects.

cd /mnt/c/Users/[username]/Sites/

mkdir [homestead_container] && cd [homestead_container]

where [homestead_container] is the name of the company or project. This directory will contain two sub-directories: one for the Craft repository files and the other for the vendor files containing Homestead.

You should now be in the following directory shown in Bash:

/mnt/c/Users/[username]/Sites/[homestead_container]

Continue using Bash on WSL in this directory for the next steps.
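In Bash on WSL, Windows drives are mounted under /mnt. As the path above shows, that means C:\Users\[username]\Sites\ becomes /mnt/c/Users/[username]/Sites/. WSL's built-in `wslpath -u 'C:\...'` does this conversion for you. The hypothetical `win_to_wsl_path` below is a minimal sketch of the same mapping and assumes a drive-letter path.

```bash
# win_to_wsl_path: hypothetical helper; `wslpath -u` is the built-in equivalent.
# Converts a drive-letter Windows path (e.g. C:\Users\example\Sites) to its
# WSL mount path (/mnt/c/Users/example/Sites).
win_to_wsl_path() {
  local drive rest
  # First character is the drive letter; lowercase it for /mnt/<drive>.
  drive="$(printf '%s' "$1" | cut -c1 | tr 'A-Z' 'a-z')"
  # Drop "C:" and turn every backslash into a forward slash.
  rest="$(printf '%s' "$1" | cut -c3- | tr '\\' '/')"
  printf '/mnt/%s%s\n' "$drive" "$rest"
}

# Example: cd "$(win_to_wsl_path 'C:\Users\example\Sites')"
```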

Install Homestead

composer require laravel/homestead

Generate the Vagrantfile and Homestead files

php vendor/bin/homestead make

Install Craft

Install Craft (for new installations)

composer create-project craftcms/craft [Path]

where [Path] is the name of the sub-directory containing Craft. I just called it craft.

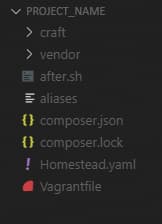

Your directory structure will probably match this screenshot
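If you'd rather check the layout from Bash than compare it against the screenshot, here is a sketch of a hypothetical `check_layout` helper. The entries it looks for are assumptions drawn from the steps above. Homestead.yaml and the Vagrantfile come from `homestead make`, vendor/laravel/homestead comes from Composer, and web is the public directory inside the Craft sub-directory (craft by default).

```bash
# check_layout: hypothetical helper. Prints each expected entry missing from
# the Homestead container directory and returns non-zero if any is missing.
# Usage: check_layout <container-dir> [craft-subdir]
check_layout() {
  local root="$1" craft_dir="${2:-craft}" status=0 entry
  for entry in Homestead.yaml Vagrantfile vendor/laravel/homestead "$craft_dir/web"; do
    if [ ! -e "$root/$entry" ]; then
      printf 'missing: %s\n' "$entry"
      status=1
    fi
  done
  return "$status"
}

# Example (from inside the container directory): check_layout . craft
```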

Configure Homestead

Open Homestead.yaml in your editor. As of Homestead 9.0.7, you’ll see the following generated code in the Homestead file.

ip: 192.168.10.10
memory: 2048
cpus: 2
provider: virtualbox
authorize: ~/.ssh/id_rsa.pub
keys:
    - ~/.ssh/id_rsa
folders:
    -
        map: ~/code
        to: /home/vagrant/code
sites:
    -
        map: craft.test
        to: /home/vagrant/code/public
databases:
    - homestead
features:
    -
        mariadb: false
    -
        ohmyzsh: false
    -
        webdriver: false
name: [homestead_container]
hostname: [homestead_container]

This is where I started with my confusion. I’ll highlight only a few lines that might cause issues.

ip: 192.168.10.10

Most of the time, this won’t be an issue. If your internal network (LAN) already uses the 192.168.10.x range, change the third octet of this address so that it no longer starts with 192.168.10.

Once that’s settled, the next thing to verify is that no firewall is blocking the connection to the virtual machine. Firewalls can be aggressive. Windows Defender, VPNs such as NordVPN, PIA, and Mullvad, and antivirus applications can all block these local connections, and each has its own IP filtering that you should make sure isn’t getting in the way. If a firewall is blocking the connection, pinging the IP address above may fail with a “General failure” message. This has bitten me before!
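To check for the 192.168.10.x clash described above, compare the Homestead ip with your Windows machine's LAN address, which `ipconfig` shows. The hypothetical `same_subnet_24` helper below assumes a typical home network with a /24 mask (255.255.255.0), where addresses that share their first three octets are on the same network.

```bash
# same_subnet_24: hypothetical helper. Succeeds if two IPv4 addresses share
# their first three octets, i.e. sit in the same /24 network.
same_subnet_24() {
  # ${1%.*} strips the last octet: 192.168.10.10 -> 192.168.10
  [ "${1%.*}" = "${2%.*}" ]
}

# Example: same_subnet_24 192.168.10.10 192.168.10.57 && echo "change the Homestead ip"
```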

Now let’s update the folders: and sites: sections.

folders:
    -
        map: C:/Users/[username]/Sites/[homestead_container]
        to: /home/vagrant/code
        type: "nfs"

The first major thing I got stuck on was how to update the map value above. In this section, map is the local directory that contains your project, and to is a directory inside the Vagrant VM that mirrors it. The sync works both ways, so you can make changes locally in your editor, or SSH into Vagrant and edit the mirrored files, and they will automagically stay in sync.

We can’t use the UNIX-style path syntax, because this is Windows:

/mnt/c/Users/[username]/Sites/[homestead_container]

We have to use the native Windows path syntax:

C:\Users\[username]\Sites\[homestead_container]

EXCEPT that, for some reason, the backslashes must be converted to forward slashes!

C:/Users/[username]/Sites/[homestead_container]

Before I made that change, whenever I ran the Vagrant virtual machine and browsed to the domain name specified in this file, all that came up in the browser window was:

No Input File Specified

I couldn’t find any documentation on what was going on until I studied this Homestead installation tutorial and saw the author’s use of forward slashes. It was such a small nuance, and so easy to overlook!
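If you're copying the path from Bash, you can script the conversion that finally fixed this. WSL's built-in `wslpath -m` prints a Windows path with forward slashes, which is the form map needed here. The hypothetical `wsl_to_win_path` below is a minimal sketch of the same idea that handles only /mnt/<drive> paths.

```bash
# wsl_to_win_path: hypothetical helper; `wslpath -m` is the built-in equivalent.
# Turns /mnt/c/Users/example/Sites/acme into C:/Users/example/Sites/acme,
# the forward-slash form that Homestead.yaml's map accepted. Returns
# non-zero for paths that are not under /mnt/<drive>.
wsl_to_win_path() {
  local drive rest
  case "$1" in
    /mnt/[a-zA-Z] | /mnt/[a-zA-Z]/*) ;;
    *) return 1 ;;
  esac
  # The sixth character is the drive letter; uppercase it for "C:".
  drive="$(printf '%s' "$1" | cut -c6 | tr 'a-z' 'A-Z')"
  # Everything after /mnt/c is kept as-is, forward slashes included.
  rest="$(printf '%s' "$1" | cut -c7-)"
  printf '%s:%s\n' "$drive" "${rest:-/}"
}

# Example (from the container directory): wsl_to_win_path "$PWD"
```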

Laravel’s documentation only briefly touches on NFS, but after a little research I found that enabling it is a good idea for performance. To use it, we need to add NFS support to Vagrant on Windows.

vagrant plugin install vagrant-winnfsd

Using Homestead on Windows will probably be slow, and using it with WSL will probably be just as slow. By slow, I mean the browser can take anywhere from a few seconds up to 20-30 seconds just to start loading asset files like CSS and JS. My average is about 7-10 seconds to get to that point. That’s really bad, and I hope it improves as I learn more. With this in mind, you can make some NFS-related tweaks to speed up Vagrant inside of [homestead_container]\vendor\laravel\homestead\scripts\homestead.rb.

Look for a line that looks like the following:

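mount_opts = folder['mount_options'] ? folder['mount_options'] : ['actimeo=1', 'nolock']

The two items in the fallback array, actimeo=1 and nolock, are the default NFS mount options. The article on speeding up Vagrant lists more options you can add to that array to help speed up access and read times. Because this line checks folder['mount_options'] first, you can probably set mount_options on the folder in Homestead.yaml instead of editing the vendor file, which Composer may overwrite when it updates Homestead.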

This whole section took a week to wrap my head around, so I’m glad I could document it for others using Windows!

Let’s continue.

Map the updated domain name to the correct public directory inside of craft

sites:
    -
        map: craft.test
        to: /home/vagrant/code/craft/web

Change map to your preferred domain name. I’d suggest not using .dev for anything, since Google now owns that top-level domain, it can resolve on the public internet, and browsers force HTTPS for it. This is why I kept .test.

to must point to the public directory inside of Craft. Older versions of Craft used a public directory outside of the craft folder. The latest versions of Craft 3 name the public directory web and keep it inside the Craft folder.

Finally, update the database name:

databases:
    - craft

If you didn’t already install vagrant-hostmanager, you’ll need to update your HOSTS file so that the virtual host name resolves in the browser. Otherwise, vagrant-hostmanager is smart enough to do this for you.
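On Windows the HOSTS file is C:\Windows\System32\drivers\etc\hosts, which WSL exposes as /mnt/c/Windows/System32/drivers/etc/hosts. Editing it requires an editor running as Administrator. Before you add the entry shown below, a hypothetical `hosts_has_entry` helper can check whether the mapping is already there. It strips the Windows CRLF line endings and # comments before comparing.

```bash
# hosts_has_entry: hypothetical helper. Succeeds if HOSTS_FILE maps IP to
# HOSTNAME on an uncommented line. Handles Windows (CRLF) line endings.
# Usage: hosts_has_entry <hosts-file> <ip> <hostname>
hosts_has_entry() {
  awk -v ip="$2" -v host="$3" '
    { sub(/\r$/, ""); sub(/#.*/, "") }   # drop the CR and any comment
    $1 == ip { for (i = 2; i <= NF; i++) if ($i == host) found = 1 }
    END { exit !found }
  ' "$1"
}

# Example:
#   hosts_has_entry /mnt/c/Windows/System32/drivers/etc/hosts 192.168.10.10 craft.test
```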

192.168.10.10 craft.test

Optionally, I recommend enabling MariaDB in place of MySQL. It’s more efficient in several ways, enough so that it’s worth enabling every time.

mariadb: true

Turn on Homestead

Run Vagrant

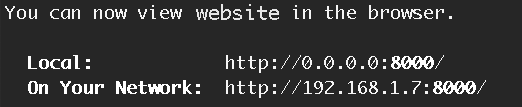

vagrant up

This will take a while, since Vagrant has to provision the virtual machine and get its server up and running. Once it’s done, open your browser and go to the URL.

http://craft.test/

If everything was set up correctly, you should now see a 503 error indicating that the database isn’t set up yet. If you don’t see something like a 503 error, or if you see the same No Input File Specified message I got earlier, revisit the sections above to make sure everything was updated correctly.
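You can run the same checks from Bash with curl. The hypothetical `diagnose_homestead` helper below encodes the hints from this post. A body containing No Input File Specified points to the folder map. A 503 means the site is being served but the database isn't configured yet. curl's 000 status (no HTTP response at all) points to the HOSTS file, the IP range, or a firewall. The sketch assumes craft.test resolves from your shell, which may not be the case in WSL.

```bash
# diagnose_homestead: hypothetical helper mapping an HTTP status code and
# response body to the troubleshooting hints in this post.
# Usage: diagnose_homestead <status-code> <body>
diagnose_homestead() {
  if printf '%s' "$2" | grep -qi 'no input file specified'; then
    echo "wrong folder map: check the forward slashes in Homestead.yaml"
  else
    case "$1" in
      503) echo "site is served; database not configured yet (expected)" ;;
      000) echo "no response: check the HOSTS file, IP range, and firewall/VPN" ;;
      *)   echo "unexpected status: $1" ;;
    esac
  fi
}

# Example:
#   code="$(curl -s -o page.html -w '%{http_code}' http://craft.test/)"
#   diagnose_homestead "$code" "$(cat page.html)"
```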

Configure the Database

If you need to import a specific database, follow these instructions to import your database into Vagrant.

For new Craft installations, update the environment file inside of Craft. In your editor, open the craft directory and find the .env file. We have to make a couple of edits to the credentials.
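If you'd rather script the edits below than make them by hand, here is a sketch of a hypothetical `set_env_var` helper. It rewrites an existing KEY= line in the .env file, or appends one if the key is missing. It writes the value in the same double-quoted form Craft's .env uses. It assumes the value contains no backslashes or double quotes, which holds for the credentials here.

```bash
# set_env_var: hypothetical helper. Sets KEY="value" in a dotenv file,
# replacing an existing KEY= line or appending one if the key is absent.
# Assumes the value contains no backslashes or double quotes.
# Usage: set_env_var <file> <key> <value>
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)" || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=\"" v "\""; found = 1; next }
    { print }
    END { if (!found) print k "=\"" v "\"" }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Example (from the container directory):
#   set_env_var craft/.env DB_USER homestead
#   set_env_var craft/.env DB_PASSWORD secret
#   set_env_var craft/.env DB_DATABASE craft
```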

# The database username to connect with

DB_USER="homestead"

# The database password to connect with

DB_PASSWORD="secret"

# The name of the database to select

DB_DATABASE="craft"

This should now allow you to get into the Craft installation page:

http://craft.test/index.php?p=admin/install

Credits

- Craft on Homestead, Part 1

- Local Development with Vagrant / Homestead

- Installing PHP 7 and Composer on Windows 10, Using Ubuntu in WSL

- Laravel Homestead with Windows 10 Step by Step setup procedure with explanation.

- Craft 3 Installation Instructions

- Setup Craft CMS on Vagrant Homestead

- Setting Up Craft CMS 3 Local Development with Laravel Homestead
